
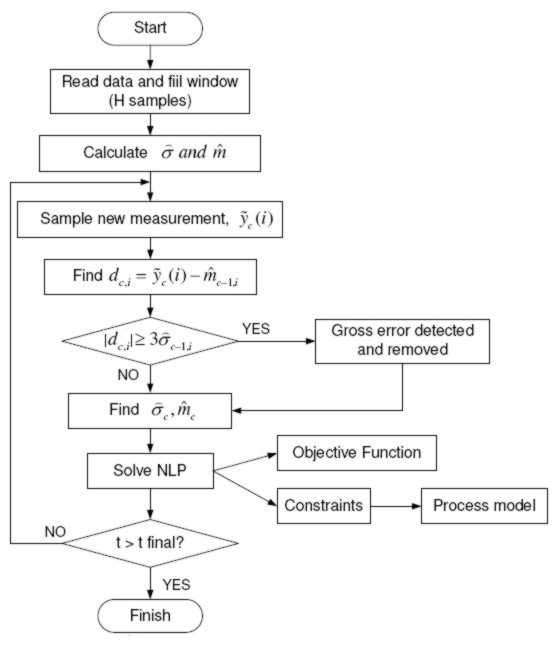

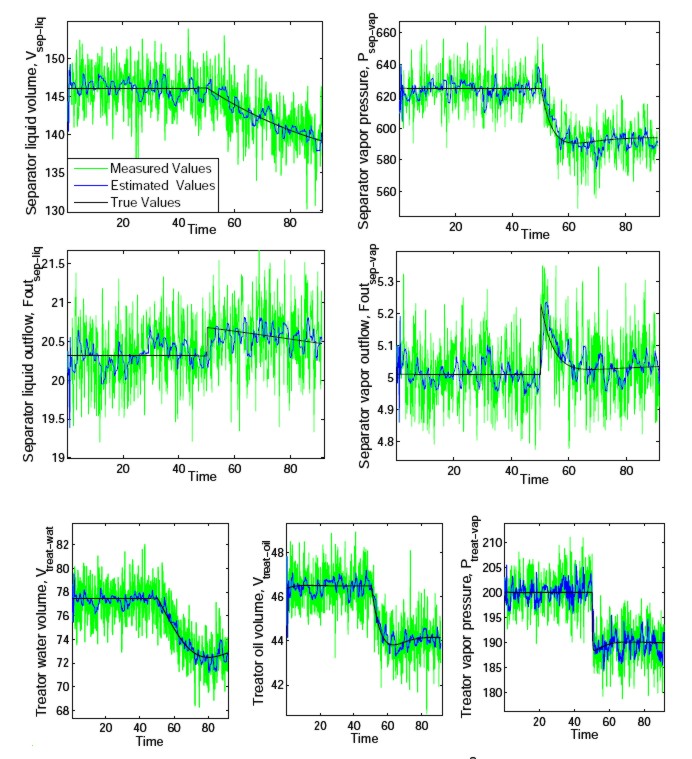

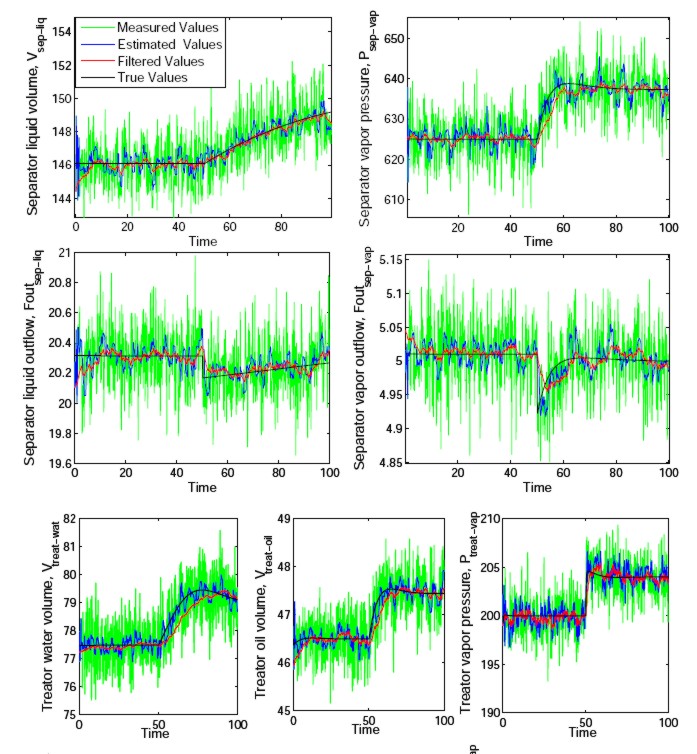

UNB Nonlinear Dynamic Data Reconciliation Agent

The core function of UNB's Nonlinear Dynamic Data Reconciliation (NDDR) Agent is to reduce the noise on the sensed variables received from the wireless sensor network (WSN) while enforcing the material and energy balances of the process being monitored. NDDR therefore differs substantially from (and improves on) low-pass filtering, which does not account for physical constraints. A secondary objective is to detect and correct "gross errors" in the data, which may be caused by, for example, momentary sensor malfunction, faulty analog-to-digital conversion, or data drop-out.

The most direct way to carry out NDDR is to find the process variable estimates that minimize the error between the noisy signals and the output of a process simulation based on a realistic, physics-based dynamic model. Specifically, as shown in Fig. 1, noisy measurements of the process inputs and outputs are collected over a time window of H samples, and an optimization algorithm (nonlinear programming, NLP) adjusts the estimated system inputs and outputs produced by the process simulation until the mean squared error between the noisy signals and the estimates is minimized. The estimates for the last sample in the window (c, the current sample) are retained, the next sample (c + 1) is taken, and the process is repeated. The noise standard deviations σ and the variable means m are also estimated for use in detecting gross errors: if a 3σ test flags a data point as a gross error, that point is replaced with the previous (c - 1) value so that it does not skew the reconciliation.

Figure 1. NDDR Agent Schematic

The first development phase for our NDDR Agent carried out these activities using a realistic mathematical model of a jacketed continuous stirred tank reactor (JCSTR) with two control loops, one for level and the other for temperature.
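The moving-horizon procedure and 3σ gross-error test described above can be sketched in Python. This is a minimal, hypothetical illustration, not the UNB MATLAB code. It makes three assumptions. First, a linear first-order model y[k+1] = a·y[k] + b·u[k] stands in for the physics-based JCSTR model. Second, the input is held constant over each window, so the weighted least-squares problem has a closed-form 2×2 solution instead of requiring an NLP solver. Third, the m and σ used by the gross-error test are computed from the samples already in the window. The names `gedr`, `reconcile`, and `run` and the parameters `a`, `b`, `H`, `sigma_u`, and `sigma_y` are illustrative only.

```python
"""Sketch of moving-horizon dynamic data reconciliation with 3-sigma GEDR.

Hypothetical illustration, not the UNB NDDR Agent code. Assumptions:
  * a linear first-order model y[k+1] = a*y[k] + b*u[k] stands in for the
    nonlinear, physics-based process model;
  * the input u is held constant over each window, so the weighted
    least-squares problem has a 2x2 closed-form solution (no NLP solver);
  * gross errors are screened with m and sigma computed from the window.
"""
import statistics


def gedr(window, new_value, k_sigma=3.0, min_samples=5):
    """Return new_value, or the previous value if it fails the k-sigma test."""
    if len(window) < min_samples:
        return new_value  # too few samples for usable statistics
    m = statistics.mean(window)
    s = statistics.stdev(window)
    if s > 0 and abs(new_value - m) > k_sigma * s:
        return window[-1]  # gross error: replace with the previous (c - 1) value
    return new_value


def reconcile(u_meas, y_meas, a, b, sigma_u, sigma_y):
    """Weighted least-squares fit of the window's initial state y0 and input u.

    Model over the window: y_hat[k] = a**k * y0 + b * u * (1 - a**k) / (1 - a),
    so the objective sum(((y_meas - y_hat) / sigma_y)**2)
    + sum(((u_meas - u) / sigma_u)**2) is quadratic in (y0, u).
    Returns (u_hat, y_hat at the current sample c).
    """
    n = len(y_meas)
    p = [a ** k for k in range(n)]                       # d y_hat / d y0
    q = [b * (1 - a ** k) / (1 - a) for k in range(n)]   # d y_hat / d u
    wy, wu = 1.0 / sigma_y ** 2, 1.0 / sigma_u ** 2
    # Normal equations: [[a11, a12], [a12, a22]] @ [y0, u] = [r1, r2]
    a11 = wy * sum(pk * pk for pk in p)
    a12 = wy * sum(pk * qk for pk, qk in zip(p, q))
    a22 = wy * sum(qk * qk for qk in q) + wu * n
    r1 = wy * sum(pk * yk for pk, yk in zip(p, y_meas))
    r2 = wy * sum(qk * yk for qk, yk in zip(q, y_meas)) + wu * sum(u_meas)
    det = a11 * a22 - a12 * a12
    y0 = (r1 * a22 - a12 * r2) / det
    u = (a11 * r2 - a12 * r1) / det
    return u, p[-1] * y0 + q[-1] * u


def run(u_stream, y_stream, a, b, H=20, sigma_u=0.1, sigma_y=0.1):
    """Slide an H-sample window over the data and keep each current estimate."""
    u_win, y_win, estimates = [], [], []
    for u_new, y_new in zip(u_stream, y_stream):
        # Screen each new sample against the statistics of the existing window.
        u_win = (u_win + [gedr(u_win, u_new)])[-H:]
        y_win = (y_win + [gedr(y_win, y_new)])[-H:]
        if len(y_win) < 2:
            estimates.append(y_win[-1])  # not enough data to reconcile yet
        else:
            estimates.append(reconcile(u_win, y_win, a, b, sigma_u, sigma_y)[1])
    return estimates
```

Consider a = 0.9, b = 0.1, a constant unit input, and a noise-free output y[k] = 1 - 0.9^k. In that case, `run` recovers the output to within rounding error. If a +5 spike is injected into y at sample 30, it fails the 3σ test and is replaced by the sample-29 value before it reaches the reconciliation step. A full agent would instead solve an NLP over the whole input trajectory with a nonlinear model.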
The variables reconciled in the JCSTR model were the process inputs (flow rate and temperature of the stream entering the tank, and flow rate and temperature of the stream flowing through the jacket) and the process outputs (fluid level in the tank, fluid temperature in the tank, and temperature of the fluid in the jacket). Mazyar Laylabadi's MScEng research using this model focussed on two goals. The first was making NDDR adaptive by estimating noise statistics for use in the optimization criterion. The second was adding gross error detection and replacement (GEDR). Specifically, he developed MATLAB code for batch processing of simulation data produced with the JCSTR model, adding adaptive features plus GEDR to the basic NDDR approach.

Pilar Moreno continued this development, extending and robustifying the MATLAB code into a true NDDR Agent that could process data from the pilot plant model in (computer) real time. Several challenges were addressed in this work. The most important were solving the NDDR problem when a physics-based nonlinear dynamic model of the process is not available, and handling a substantially more realistic and complicated system, the pilot plant, which has five control loops and a wide range of time constants. An example of the NDDR Agent's performance in processing noisy signals from the pilot plant is shown in Fig. 2.

Figure 2. NDDR Agent Performance

Figure 3 compares NDDR with low-pass filtering (LPF), with the bandwidth of the low-pass filter adjusted to give a similar reduction in noise standard deviation. LPF appears to reduce the noise level somewhat more than NDDR. However, LPF introduces dynamic lags into the results, and these lags increase the effective noise σ to approximately that of NDDR. These artificial filter lags do not represent the process dynamics, so they must be considered detrimental in this context.

Figure 3. Comparison of NDDR and LPF Performance

This part of the UNB PAWS effort has been completed and delivered (Miss Moreno's MScEng thesis was successfully defended in January 2010).

Information supplied by: Jim Taylor. Last update: 17 December 2009. Email requests for further information to: Jim Taylor (jtaylor@unb.ca)